1.0 docker-compose.yml

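Lab environment:

OS: CentOS Linux release 7.6.1810 (Core)

Kernel: Linux mcabana-node1 3.10.0-1062.18.1.el7.x86_64 #1 SMP Tue Mar 17 23:49:17 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

docker version: Docker version 19.03.8, build afacb8b

docker-compose version: docker-compose version 1.25.4, build unknown

ELK version to install: 7.6.1

cerebro version: 0.8.5

Use docker-compose to deploy a three-node elasticsearch 7.6.1 cluster together with kibana 7.6.1, logstash 7.6.1, and cerebro, a monitoring tool for ES.

Before bringing the stack up, raise vm.max_map_count on the host. Elasticsearch's bootstrap check requires at least 262144, and the nodes will refuse to start with a lower value.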

echo 'vm.max_map_count=262144' >>/etc/sysctl.conf

sysctl -p
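To confirm that the setting took effect before starting the containers, the live value can be read back from /proc. The following is a minimal sketch; the helper name `meets_es_minimum` is made up for illustration, and 262144 is the minimum named in the Elasticsearch bootstrap check message.

```python
from pathlib import Path

# Minimum vm.max_map_count accepted by Elasticsearch's bootstrap check.
ES_MIN_MAP_COUNT = 262144


def meets_es_minimum(value: int) -> bool:
    """Return True when a vm.max_map_count value is high enough (hypothetical helper)."""
    return value >= ES_MIN_MAP_COUNT


if __name__ == "__main__":
    # On Linux the live kernel value is exposed under /proc/sys.
    proc_file = Path("/proc/sys/vm/max_map_count")
    if proc_file.exists():
        current = int(proc_file.read_text().strip())
        print(current, "OK" if meets_es_minimum(current) else "too low")
    else:
        print("vm.max_map_count is not exposed on this system")
```

If the script reports "too low", re-run sysctl -p and check that the line was really appended to /etc/sysctl.conf.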

version: '2.2'
services:
  # First Elasticsearch node; the only one that publishes port 9200 to the host.
  es01:
    image: elasticsearch:7.6.1
    container_name: es01
    environment:
      - node.name=es01
      - cluster.name=mcabana-cluster
      - discovery.seed_hosts=es02,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    # Unlimited memlock is needed for bootstrap.memory_lock=true.
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - es_data01:/usr/share/elasticsearch/data
      - es_conf01:/usr/share/elasticsearch/config
    ports:
      - "9200:9200"
    networks:
      - elastic
  es02:
    image: elasticsearch:7.6.1
    container_name: es02
    environment:
      - node.name=es02
      - cluster.name=mcabana-cluster
      - discovery.seed_hosts=es01,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - es_data02:/usr/share/elasticsearch/data
      - es_conf02:/usr/share/elasticsearch/config
    networks:
      - elastic
  es03:
    image: elasticsearch:7.6.1
    container_name: es03
    environment:
      - node.name=es03
      - cluster.name=mcabana-cluster
      - discovery.seed_hosts=es01,es02
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - es_data03:/usr/share/elasticsearch/data
      - es_conf03:/usr/share/elasticsearch/config
    networks:
      - elastic
  kibana:
    image: kibana:7.6.1
    container_name: kibana
    ports:
      - "5601:5601"
    environment:
      SERVER_NAME: "kibana.example.com"
      SERVER_HOST: "0.0.0.0"
      XPACK_GRAPH_ENABLED: "true"
      XPACK_MONITORING_COLLECTION_ENABLED: "true"
      ELASTICSEARCH_HOSTS: "http://es01:9200"
      # Switches the Kibana UI to Chinese.
      I18N_LOCALE: "zh-CN"
    external_links:
      - es01:es01
    volumes:
      - kibana_conf:/usr/share/kibana/config
    networks:
      - elastic
  logstash:
    image: logstash:7.6.1
    container_name: logstash
    ports:
      - "5044:5044"
      - "9600:9600"
    environment:
      # Logstash reads its JVM options from LS_JAVA_OPTS, not ES_JAVA_OPTS.
      - "LS_JAVA_OPTS=-Xms512m -Xmx512m"
    external_links:
      - es01:es01
    volumes:
      - /etc/localtime:/etc/localtime
      - logstash_conf:/usr/share/logstash/config
      - logstash_pipeline:/usr/share/logstash/pipeline
      - logstash_input:/usr/share/logstash/infile
    networks:
      - elastic
  cerebro:
    image: lmenezes/cerebro:0.8.5
    container_name: cerebro
    ports:
      # Host port 9800 maps to cerebro's container port 9000.
      - "9800:9000"
    command:
      - -Dhosts.0.host=http://es01:9200
    networks:
      - elastic
volumes:
  es_data01:
  es_data02:
  es_data03:
  es_conf01:
  es_conf02:
  es_conf03:
  kibana_conf:
  logstash_pipeline:
  logstash_conf:
  logstash_input:
networks:
  elastic:
    driver: bridge

Run docker-compose up in the directory that contains docker-compose.yml to start everything.
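Once the containers are running, it is worth checking that all three nodes actually joined the cluster. The sketch below queries the `_cluster/health` API through the port published by es01. It assumes it is run on the Docker host, so `http://localhost:9200` is an assumption, and `cluster_is_ready` and `fetch_health` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: run on the Docker host, where es01 publishes port 9200.
ES_URL = "http://localhost:9200"
EXPECTED_NODES = 3  # es01, es02, es03


def cluster_is_ready(health: dict, expected_nodes: int = EXPECTED_NODES) -> bool:
    """Check a _cluster/health response body (hypothetical helper)."""
    return (
        health.get("number_of_nodes") == expected_nodes
        and health.get("status") in ("green", "yellow")
    )


def fetch_health(base_url: str = ES_URL) -> dict:
    """GET /_cluster/health and decode the JSON body."""
    with urllib.request.urlopen(f"{base_url}/_cluster/health", timeout=10) as resp:
        return json.load(resp)


if __name__ == "__main__":
    try:
        health = fetch_health()
    except (OSError, ValueError) as exc:
        # Connection refused usually means the nodes are still starting.
        print("cluster not reachable:", exc)
    else:
        print(health.get("cluster_name"), health.get("status"), health.get("number_of_nodes"))
        print("ready" if cluster_is_ready(health) else "not ready yet")
```

A status of yellow is accepted here because a freshly started cluster may still be allocating replica shards.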

In kibana 7.x, setting I18N_LOCALE: "zh-CN" in the configuration is enough to get the Chinese UI.

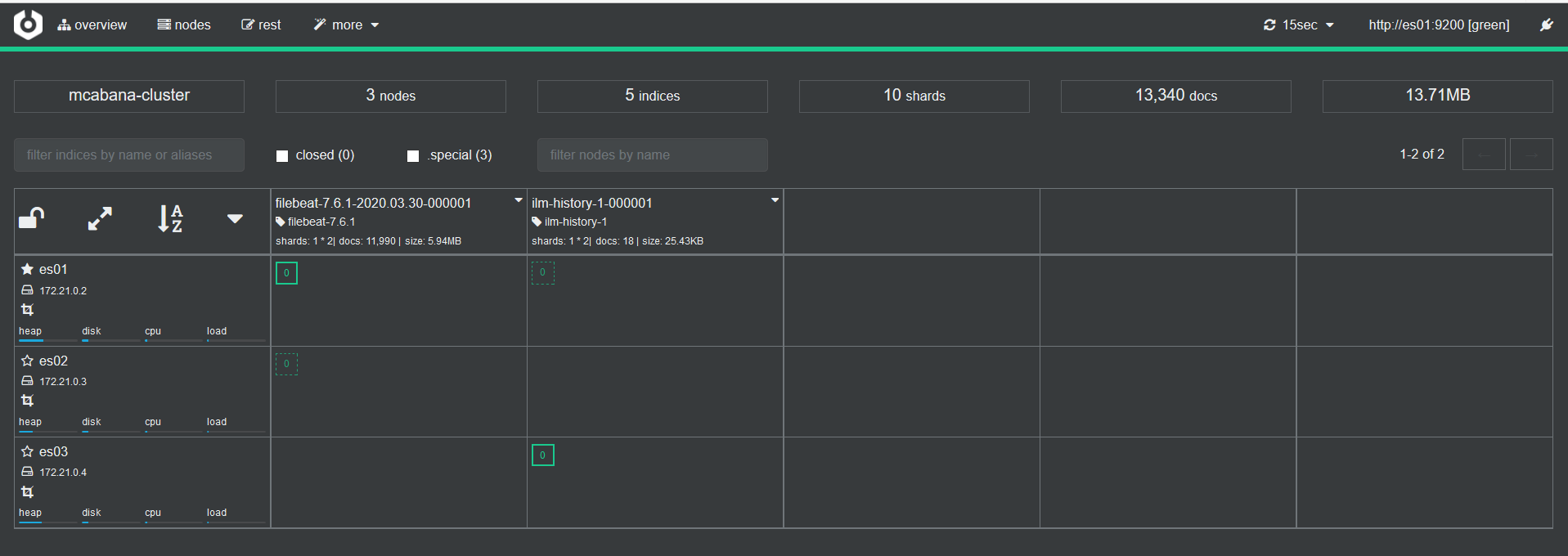

2.0 cerebro

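Open port 9800 on the host in a browser to reach cerebro (the compose file maps host port 9800 to cerebro's container port 9000).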

3.0 Deploy logstash and import a test dataset

Edit the file /usr/share/logstash/pipeline/logstash.conf:

input {
  file {
    path => "/usr/share/logstash/infile/ml-latest-small/movies.csv"
    start_position => "beginning"
    # Do not remember the read position, so the file is re-read on every start.
    sincedb_path => "/dev/null"
  }
}

filter {
  csv {
    separator => ","
    columns => ["id","content","genre"]
  }

  # Turn "A|B|C" into an array of genres.
  mutate {
    split => { "genre" => "|" }
    remove_field => ["path", "host","@timestamp","message"]
  }

  # Split "Title (Year)" on "(" and copy the two parts into their own fields.
  mutate {
    split => ["content", "("]
    add_field => { "title" => "%{[content][0]}"}
    add_field => { "year" => "%{[content][1]}"}
  }

  mutate {
    convert => {
      "year" => "integer"
    }
    strip => ["title"]
    remove_field => ["path", "host","@timestamp","message","content"]
  }
}

output {
  elasticsearch {
    hosts => "http://es01:9200"
    index => "movies"
    # Use the movie id as the document id so re-imports overwrite instead of duplicating.
    document_id => "%{id}"
  }
  stdout {}
}
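To make the filter chain concrete, the following Python sketch mimics what the csv and mutate steps do to a single line in the movies.csv format. It only illustrates the intended result and is not how Logstash works internally. The function name `transform_row` is hypothetical, and the year handling rests on the assumption that the integer conversion keeps the leading digits of a string such as "1995)".

```python
import csv
import re


def transform_row(line: str) -> dict:
    """Mimic the csv + mutate steps for one CSV line (hypothetical helper)."""
    # csv filter: columns => ["id", "content", "genre"]
    movie_id, content, genre = next(csv.reader([line]))

    # mutate split: "genre" on "|", "content" on "("
    genres = genre.split("|")
    parts = content.split("(")

    # add_field + strip: title is the first part with surrounding spaces removed
    title = parts[0].strip()
    # add_field: year is the second part, e.g. "1995)"
    year_text = parts[1] if len(parts) > 1 else ""

    # convert => integer: assumed to keep only the leading digits, else 0
    match = re.match(r"\s*(\d+)", year_text)
    year = int(match.group(1)) if match else 0

    return {"id": movie_id, "title": title, "year": year, "genre": genres}


if __name__ == "__main__":
    print(transform_row("1,Toy Story (1995),Adventure|Animation|Children|Comedy|Fantasy"))
```

Because the year is taken from the second part of the split, a title that contains an extra pair of parentheses before the year would not yield a usable year under this logic.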

3.0.1 Download the test dataset

http://files.grouplens.org/datasets/movielens/ml-latest-small.zip

Unzip it into /usr/share/logstash/infile/ (the directory backed by the logstash_input volume), then start logstash.

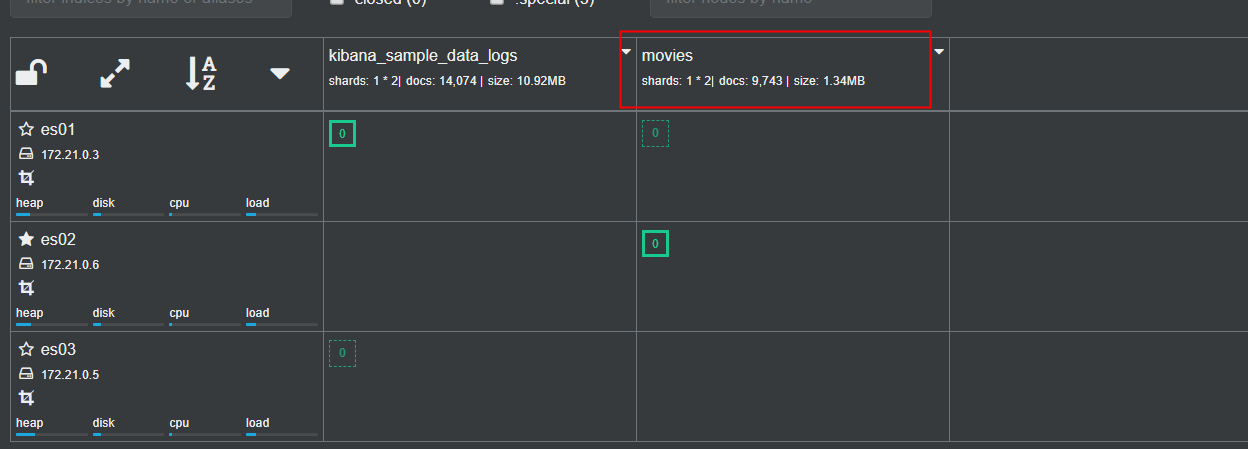

Open cerebro and you will see that a new index has been created, which means the data was imported successfully.

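To start logstash by hand, run logstash -f example.conf, where example.conf stands for the pipeline file (here /usr/share/logstash/pipeline/logstash.conf).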

The imported dataset will be used in later experiments that take a closer look at Elasticsearch.
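Besides looking at cerebro, the import can be spot-checked with a search against the movies index. The sketch below builds a match query on the title field and prints a few hits. As before, `http://localhost:9200` assumes the script runs on the Docker host, and `build_title_search` is a hypothetical helper name.

```python
import json
import urllib.request

# Assumption: run on the Docker host, where es01 publishes port 9200.
ES_URL = "http://localhost:9200"


def build_title_search(term: str, base_url: str = ES_URL) -> urllib.request.Request:
    """Build a POST /movies/_search request with a match query on title."""
    body = {"query": {"match": {"title": term}}, "size": 5}
    return urllib.request.Request(
        f"{base_url}/movies/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )


if __name__ == "__main__":
    try:
        with urllib.request.urlopen(build_title_search("story"), timeout=10) as resp:
            result = json.load(resp)
        # In Elasticsearch 7.x, hits.total is an object with a "value" field.
        print("total hits:", result["hits"]["total"]["value"])
        for hit in result["hits"]["hits"]:
            print(hit["_source"].get("title"), hit["_source"].get("year"))
    except (OSError, ValueError, KeyError) as exc:
        print("search failed:", exc)
```

The search term "story" is only an example; any word expected to appear in a movie title will do.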

https://www.hugbg.com/archives/1805.html
